I am a Principal Research Scientist at Red Hat AI Innovation and MIT-IBM Watson AI Lab, working on Generative AI with a focus on efficient and controllable generation, particularly in diffusion models, LLMs, and diffusion LLMs. I obtained my PhD in Computer Science from Rutgers University in 2024, advised by Prof. Dimitris Metaxas. During my PhD, I spent time as a research intern at Google Research, MIT-IBM Watson AI Lab, Snap Research, NEC Labs America, Tencent, and the Robotics Institute. Previously, I earned my master's degree from Carnegie Mellon University and my bachelor's degree from Chien-Shiung Wu College, Southeast University. Email: lastnamefirstname [at] gmail [dot] com or firstname.lastname [at] rutgers [dot] edu

| 11-2025 | Two papers accepted to AAAI-2026 as Oral Presentations! |

| 11-2025 | APEER won the Best Paper Award at the 2025 ACM Web Conference Workshop! |

| 09-2025 | Two papers accepted to NeurIPS-2025! |

| 08-2025 | Hopscotch accepted to EMNLP-2025! |

| 07-2025 | SQuat accepted to COLM-2025! |

| 02-2025 | Two papers accepted to CVPR-2025! |

| 01-2025 | Three papers accepted to ICLR-2025! |

| 10-2024 | One paper accepted to WACV-2025! |

| 10-2024 | One paper accepted to NeurIPS-2024! |

| 02-2024 | One paper accepted to CVPR-2024! |

| 10-2023 | Two papers accepted to WACV-2024! |

| 09-2023 | One paper accepted to NeurIPS-2023! |

| 07-2023 | One paper accepted to ICCV-2023! |

| 06-2023 | One paper accepted to MICCAI-2023! |

| 06-2023 | One paper accepted to TMLR! |

| 03-2023 | Our paper Constructive Assimilation was accepted to GCV-2023! |

| 02-2023 | Two papers accepted to CVPR-2023! |

Selected publications are highlighted. (* equal contribution, † corresponding author)

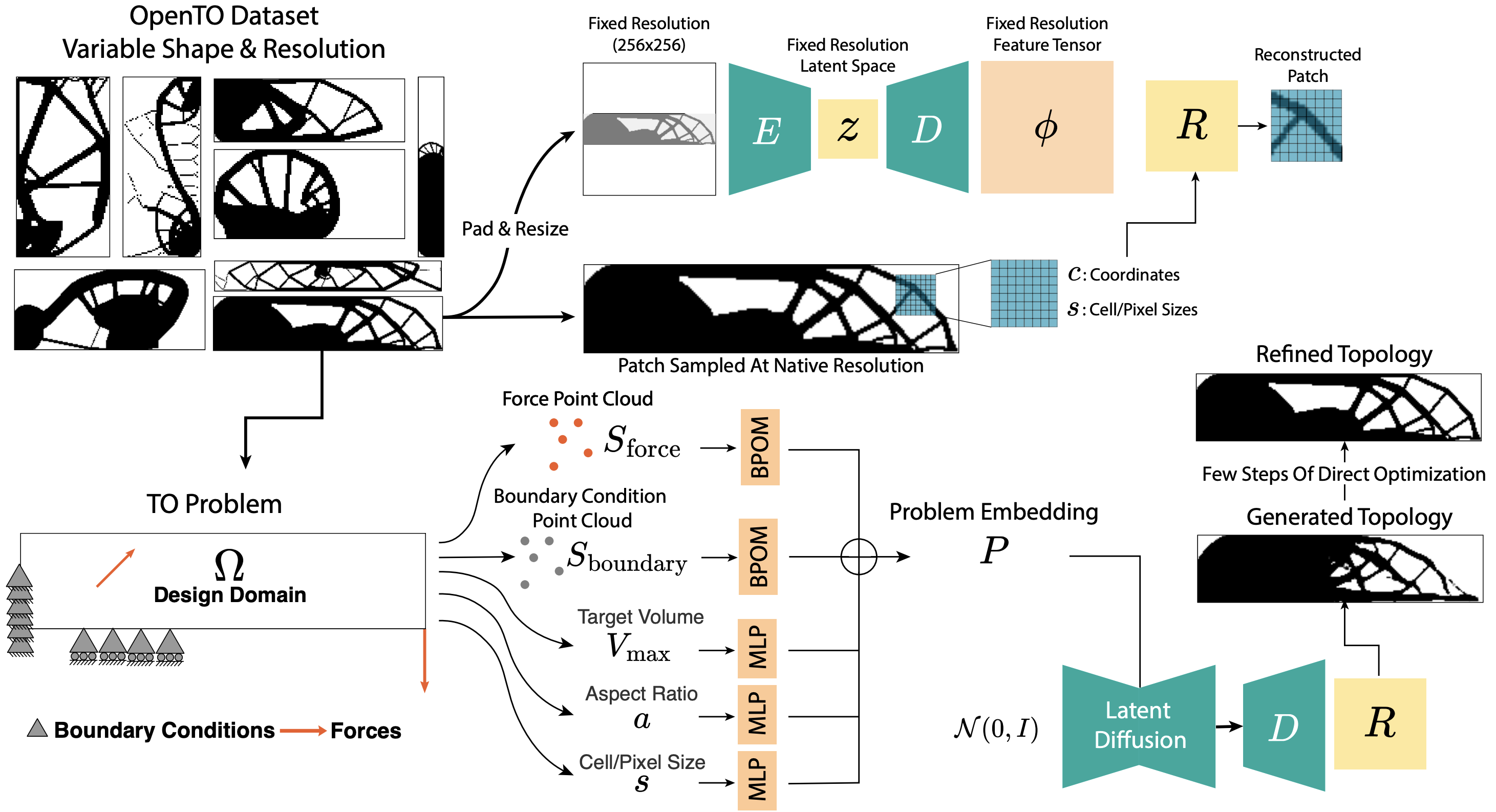

Amin Heyrani Nobari, Lyle Regenwetter, Cyril Picard, Ligong Han, Faez Ahmed. Accepted at Thirty-ninth Conference on Neural Information Processing Systems (NeurIPS), 2025 [arXiv] [Github] [bibtex]

Haizhou Shi, Yibin Wang, Ligong Han, Huan Zhang, Hao Wang. Accepted at Thirty-ninth Conference on Neural Information Processing Systems (NeurIPS), 2025 [arXiv] [Github] [bibtex]

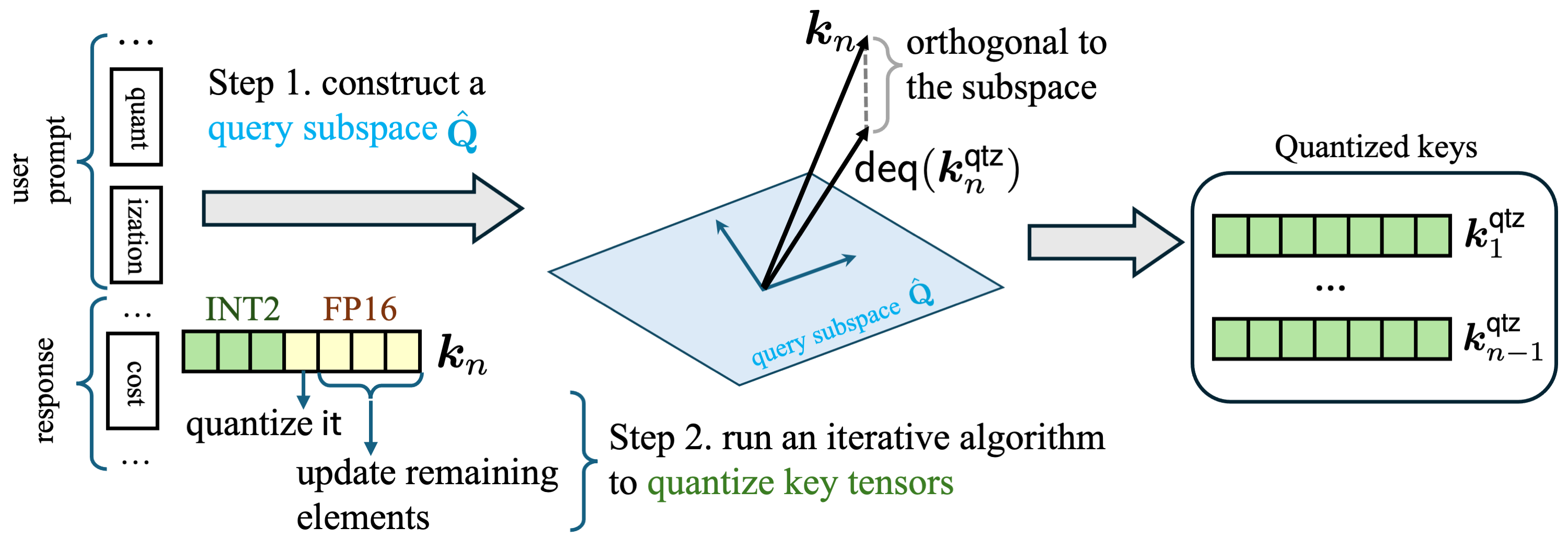

Hao Wang*, Ligong Han*, Kai Xu, Akash Srivastava. Accepted at Conference on Language Modeling (COLM), 2025 [arXiv] [Github] [bibtex]

Can Jin, Mingyu Zhao, Shiyu Zhao, Zhenting Wang, Xiaoxiao He, Ligong Han, Tong Che, Dimitris Metaxas. Accepted to International Conference on Learning Representations (ICLR), 2025 [arXiv] [Github] [bibtex]

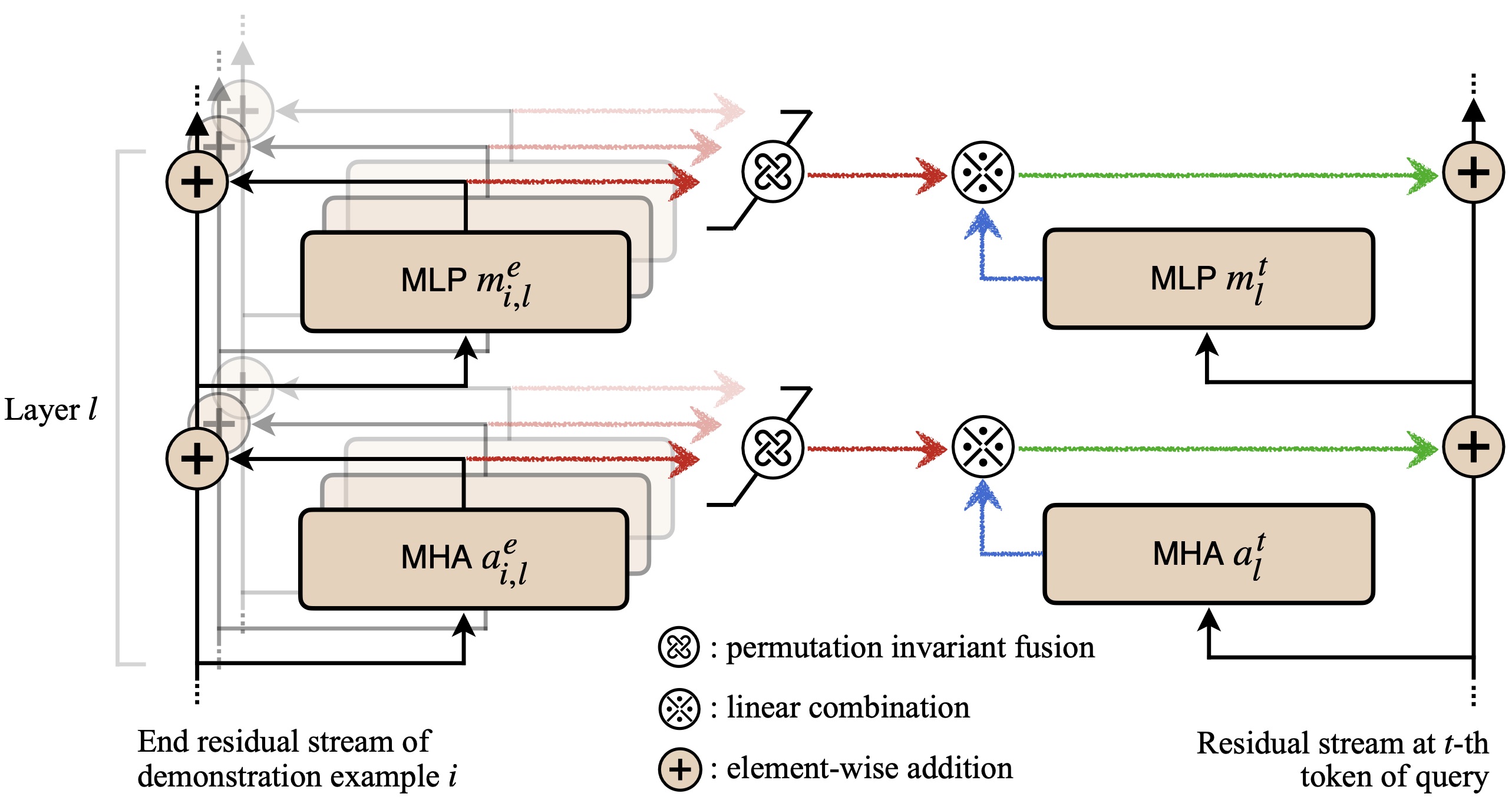

Aldo Pareja, Nikhil Shivakumar Nayak, Hao Wang, Krishnateja Killamsetty, Shivchander Sudalairaj, Wenlong Zhao, Seungwook Han, Abhishek Bhandwaldar, Guangxuan Xu, Kai Xu, Ligong Han, Luke Inglis, Akash Srivastava. Accepted to International Conference on Learning Representations (ICLR), 2025 [arXiv] [InstructLab] [bibtex]

Xiaoxiao He, Ligong Han†, Quan Dao, Song Wen, Minhao Bai, Di Liu, Han Zhang, Martin Renqiang Min, Juefei Xu, Chaowei Tan, Bo Liu, Kang Li, Hongdong Li, Junzhou Huang, Faez Ahmed, Akash Srivastava, Dimitris Metaxas. Accepted to Winter Conference on Applications of Computer Vision (WACV), 2026 [arXiv] [Project Page] [bibtex]

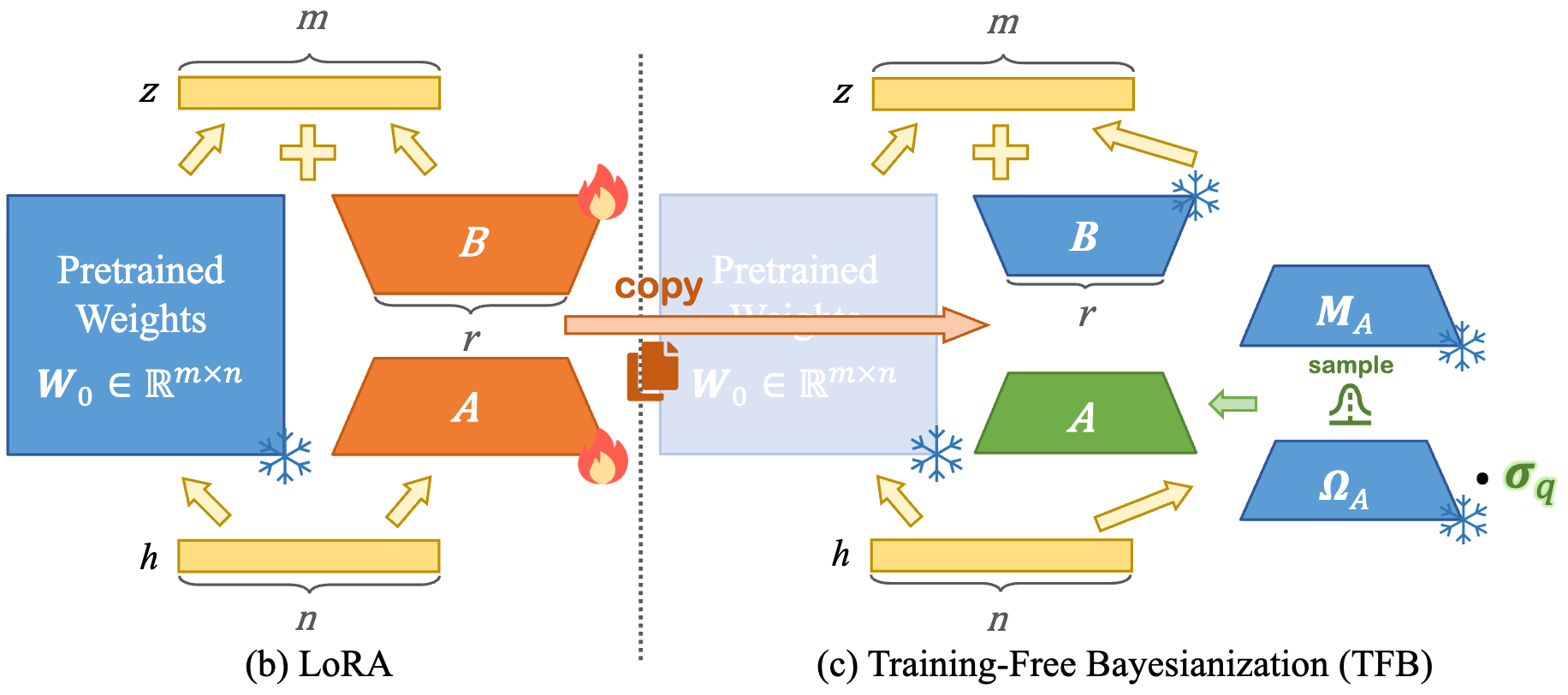

Yibin Wang*, Haizhou Shi*, Ligong Han, Dimitris Metaxas, Hao Wang. Accepted at Thirty-eighth Conference on Neural Information Processing Systems (NeurIPS), 2024 [arXiv] [bibtex]

Xinxi Zhang*, Song Wen*, Ligong Han*†, Juefei Xu, Akash Srivastava, Junzhou Huang, Hao Wang, Molei Tao, Dimitris Metaxas. Accepted at Winter Conference on Applications of Computer Vision (WACV), 2025 [arXiv] [Github] [bibtex]

Zhuowei Li, Zihao Xu, Ligong Han, Yunhe Gao, Song Wen, Di Liu, Hao Wang, Dimitris Metaxas. Accepted to International Conference on Learning Representations (ICLR), 2025 [arXiv] [Project Page] [Github] [bibtex]

Anastasis Stathopoulos, Ligong Han, Dimitris Metaxas. Accepted to Conference on Computer Vision and Pattern Recognition (CVPR), 2024 [arXiv] [Project Page] [Github] [bibtex]

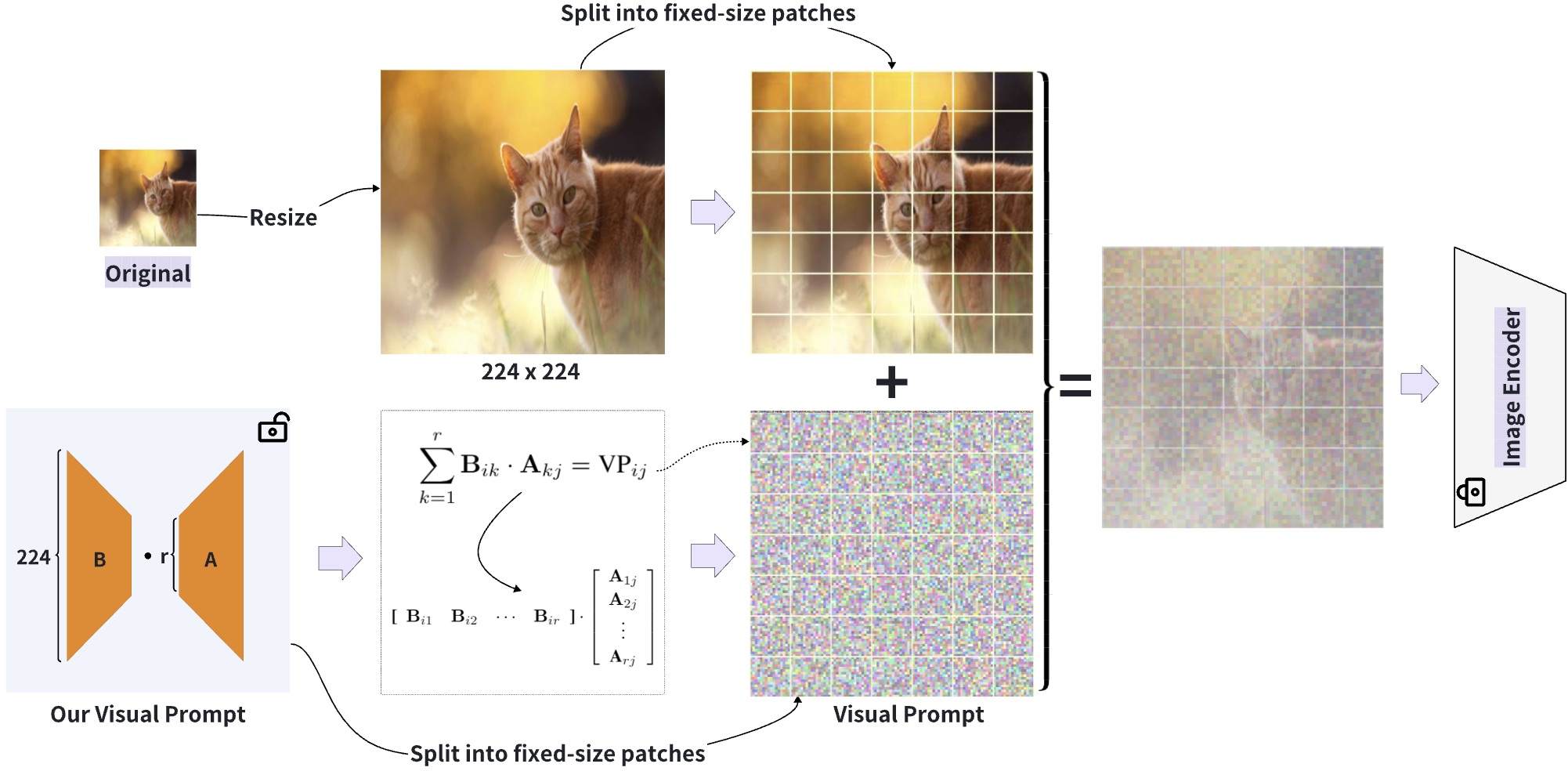

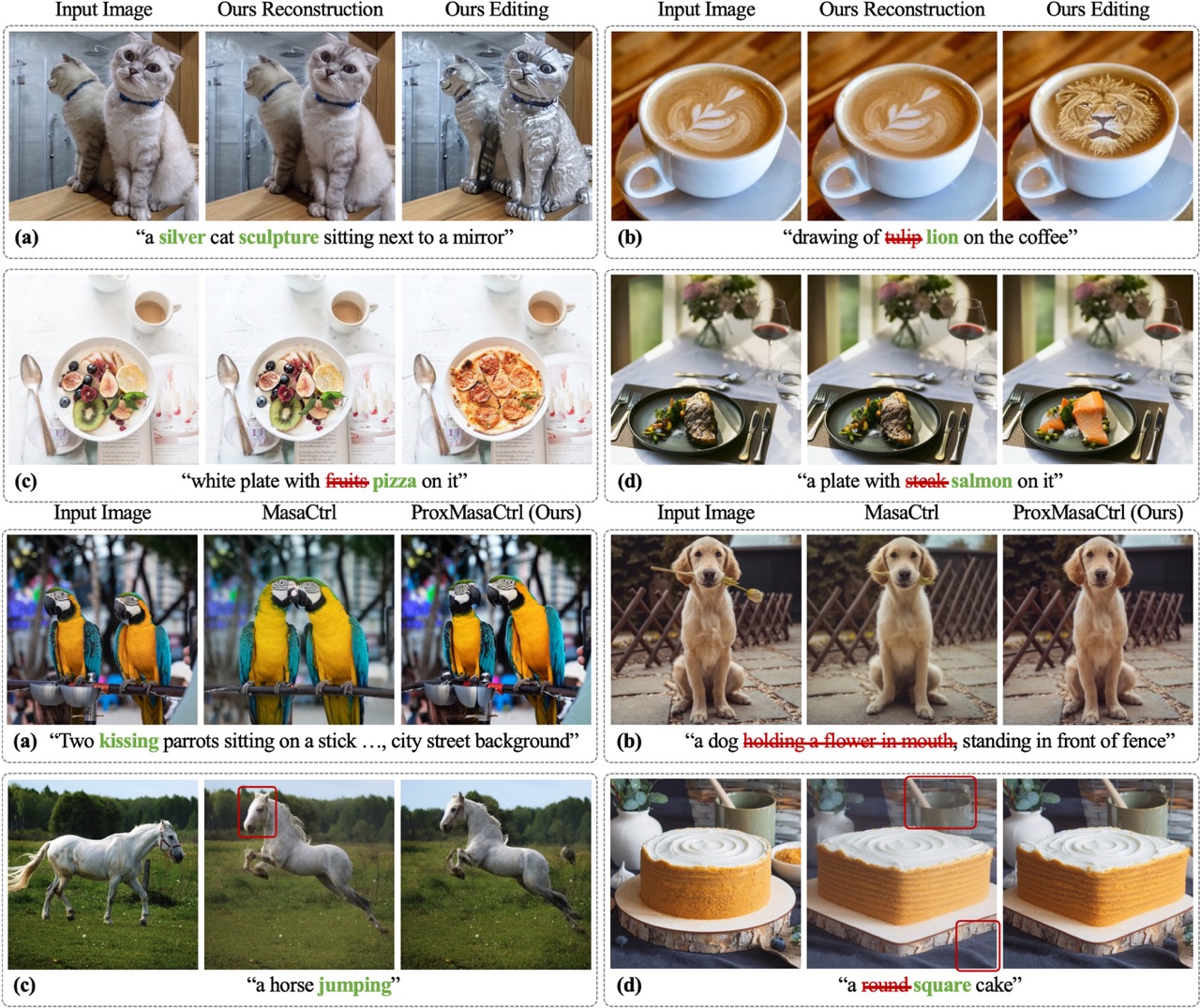

Ligong Han†, Song Wen, Qi Chen, Zhixing Zhang, Kunpeng Song, Mengwei Ren, Ruijiang Gao, Yuxiao Chen, Di Liu, Qilong Zhangli, Anastasis Stathopoulos, Jindong Jiang, Zhaoyang Xia, Akash Srivastava, Dimitris Metaxas. Accepted at Winter Conference on Applications of Computer Vision (WACV), 2024 [arXiv] [poster] [Github] [bibtex]

Qi Chen, Changjian Shui, Ligong Han, Mario Marchand. Accepted at Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), 2023 [arXiv] [poster] [Github] [bibtex]

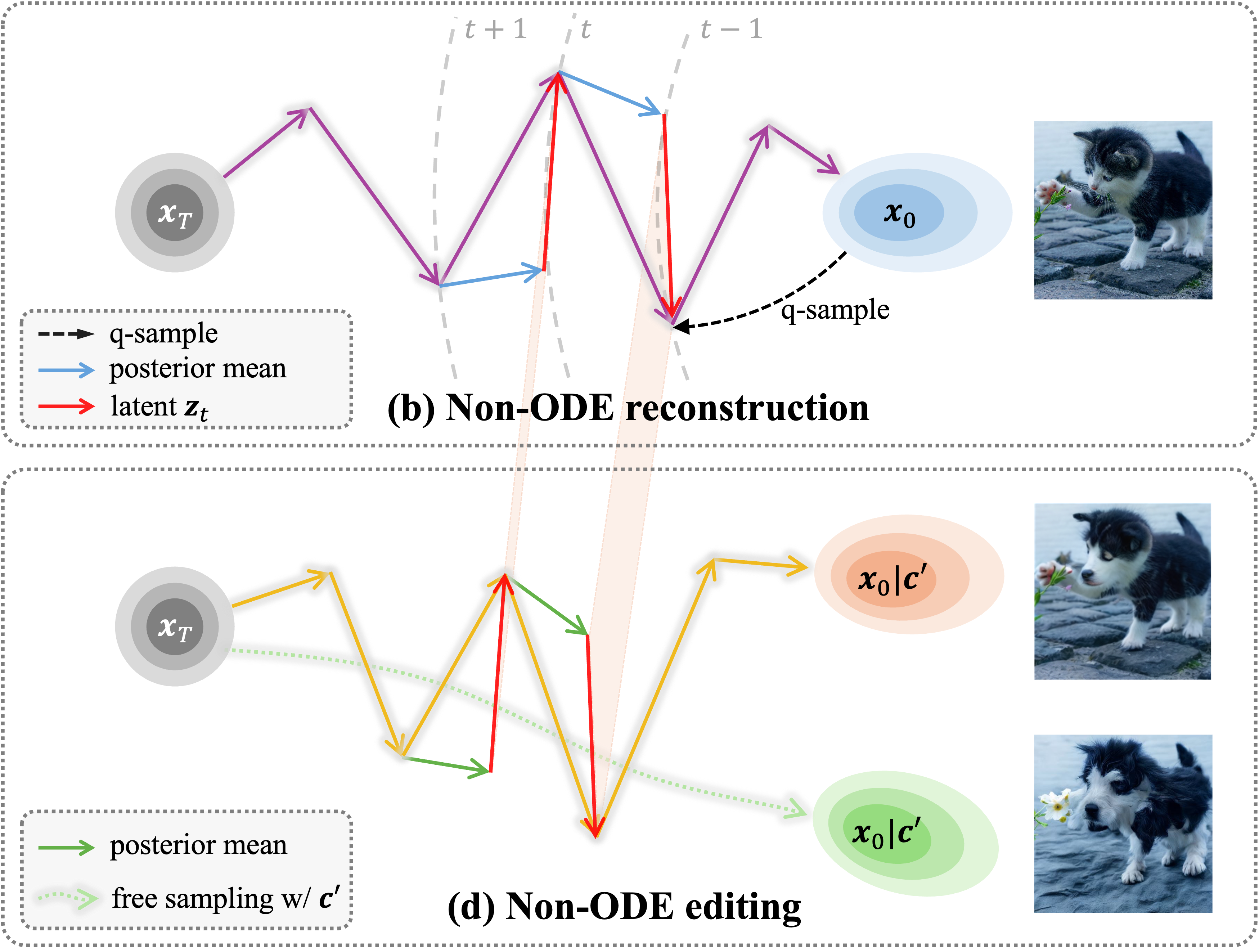

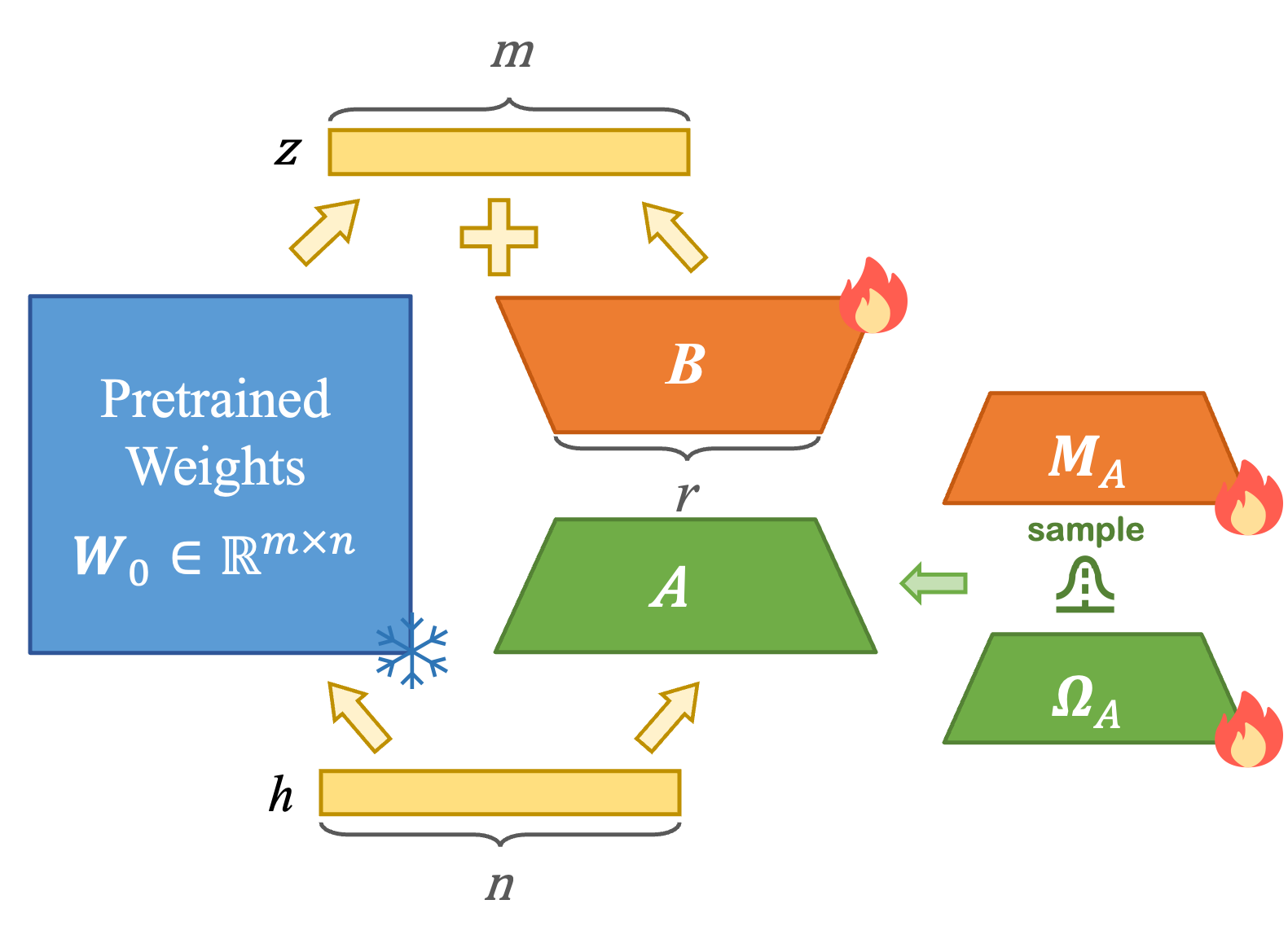

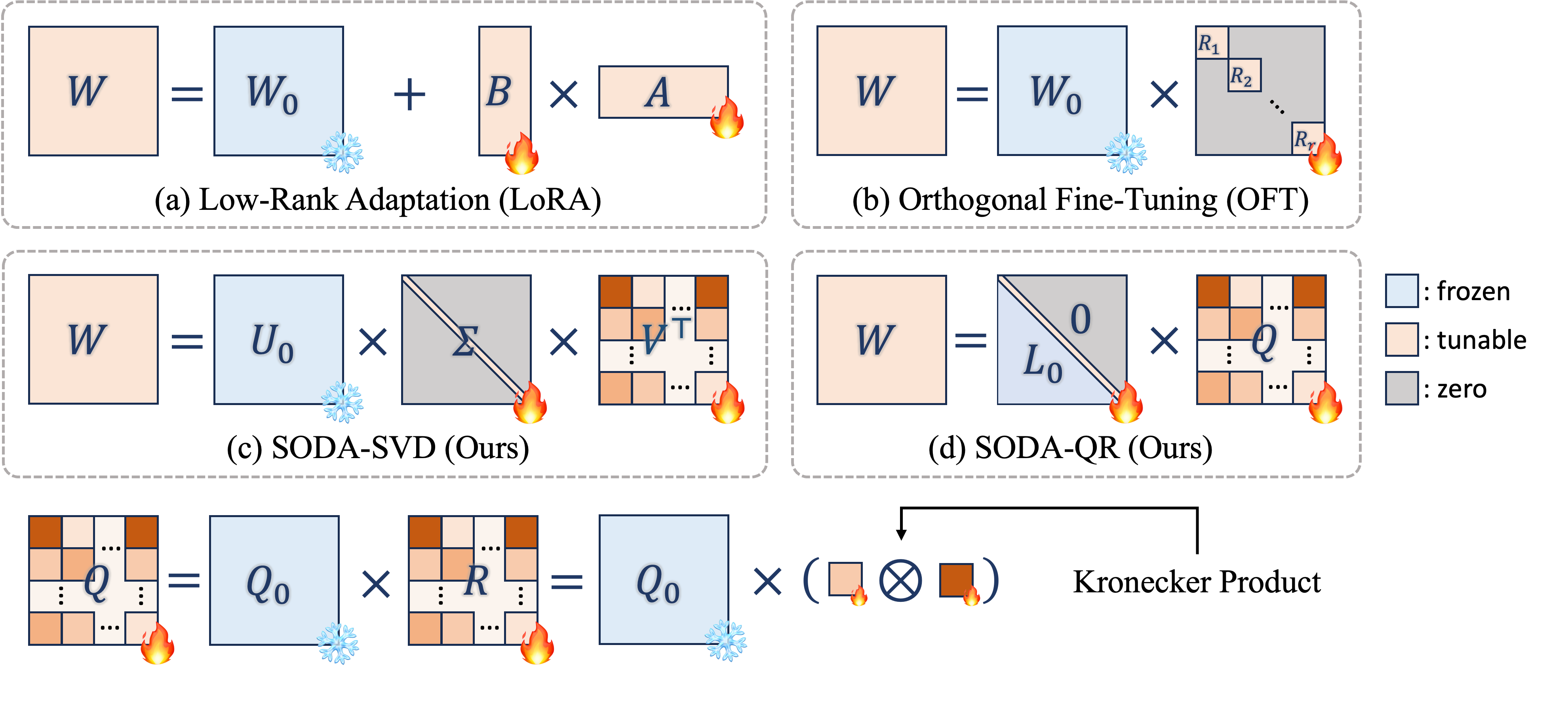

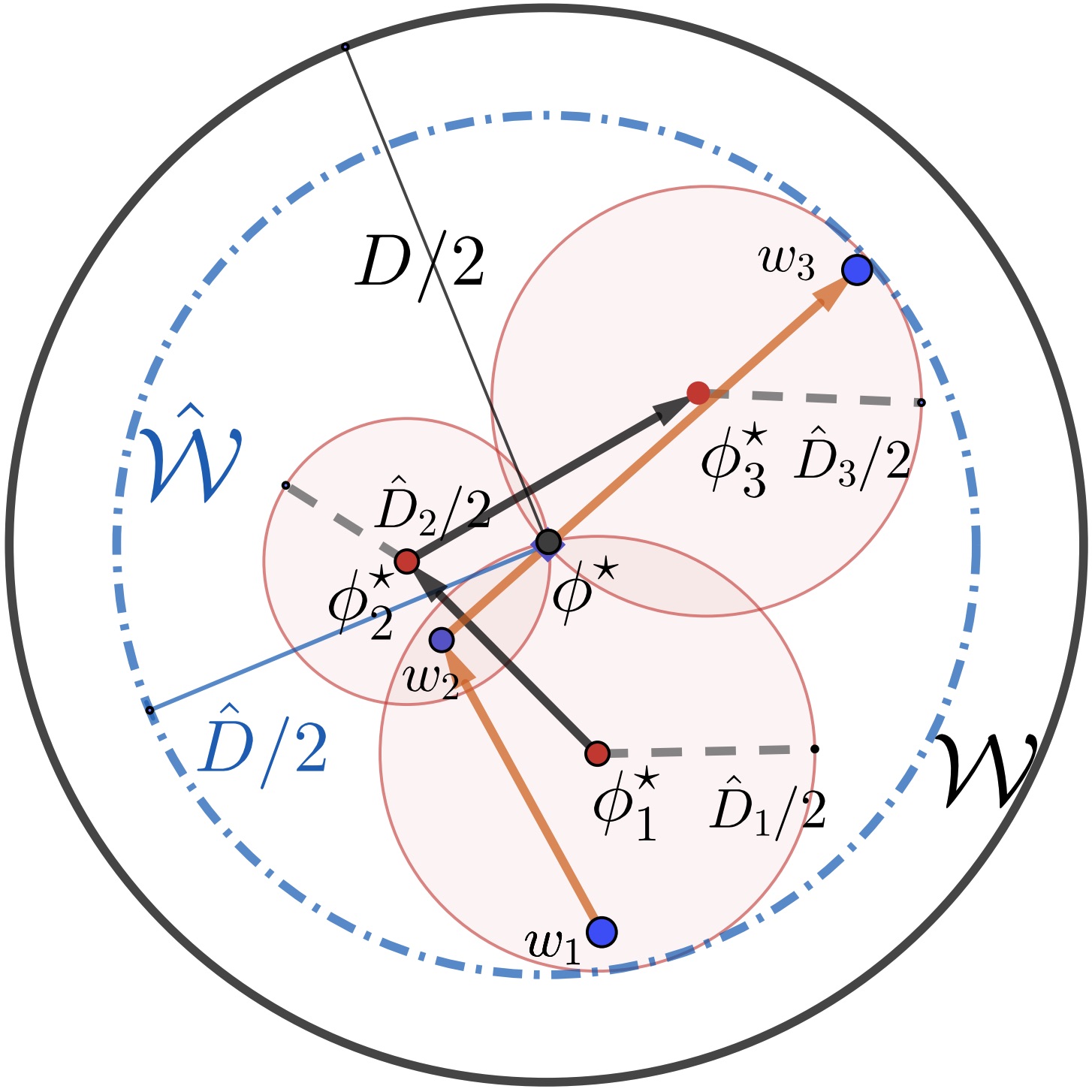

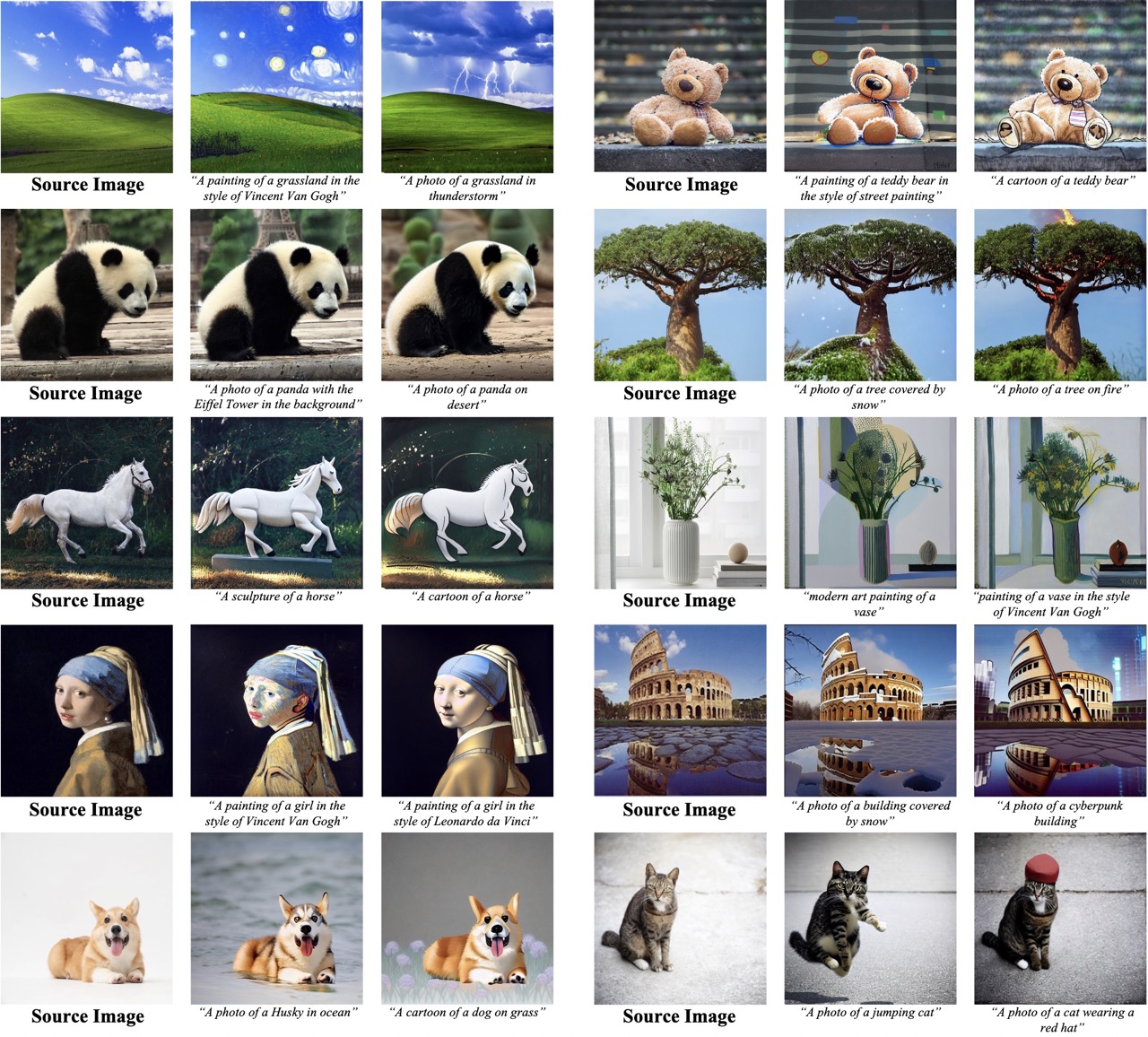

Ligong Han†, Yinxiao Li, Han Zhang, Peyman Milanfar, Dimitris Metaxas, Feng Yang. Accepted at International Conference on Computer Vision (ICCV), 2023 [arXiv] [Unofficial Code] [PEFT-SVD] [Project Page] [poster] [bibtex]

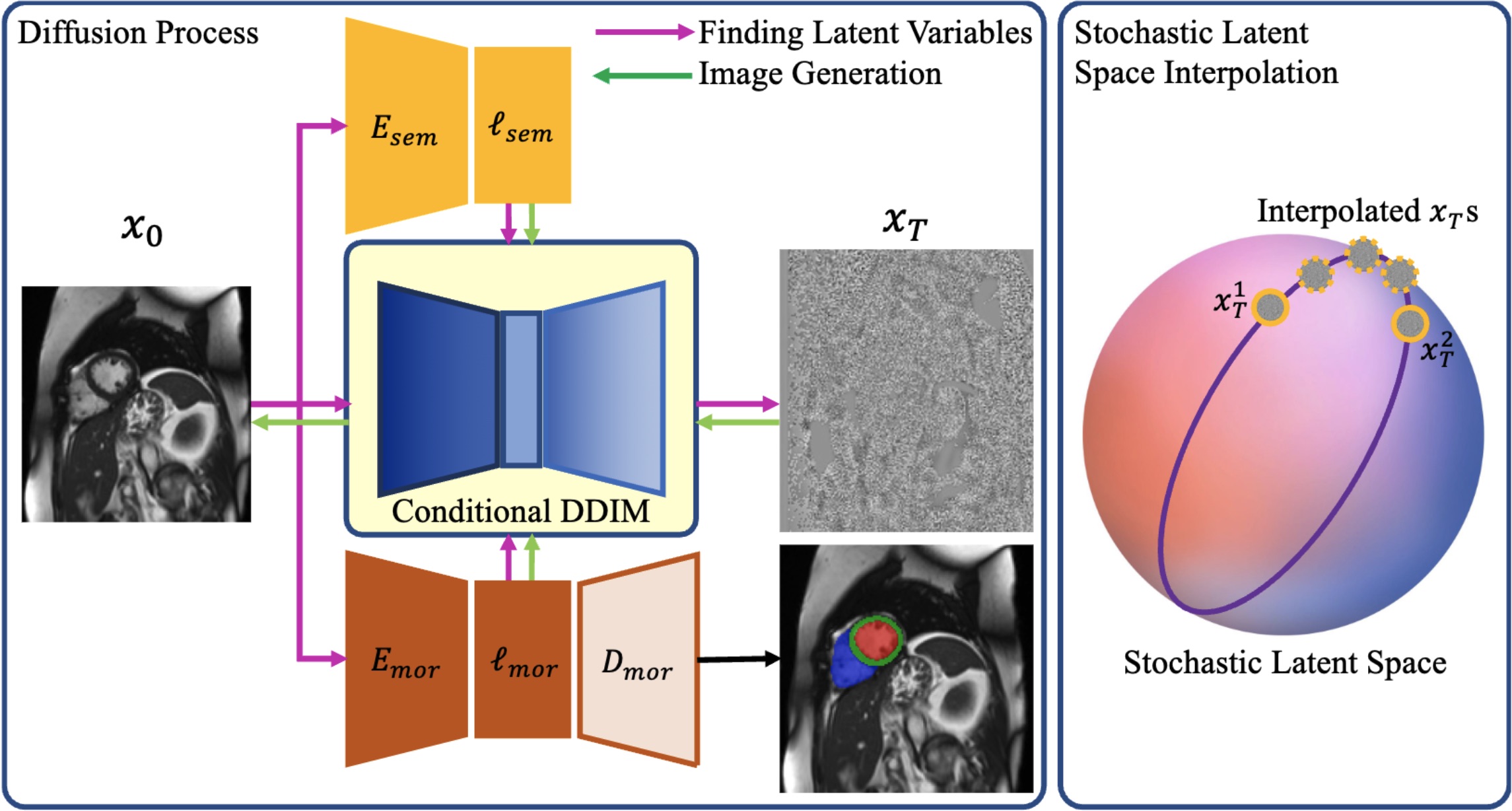

Xiaoxiao He, Chaowei Tan, Ligong Han, Bo Liu, Leon Axel, Kang Li, Dimitris Metaxas. Accepted at International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), 2023 [arXiv] [Github] [bibtex]

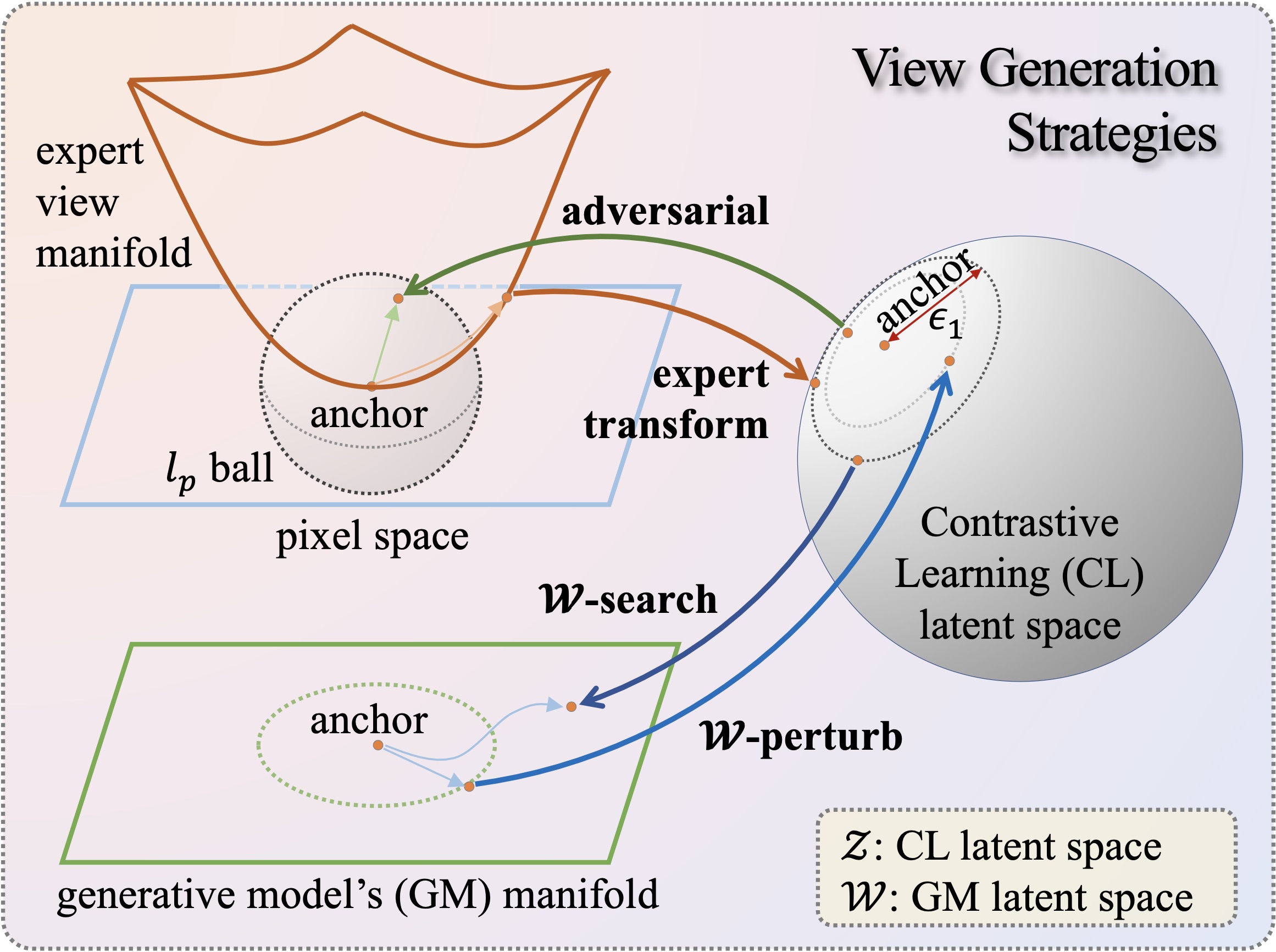

Ligong Han†, Seungwook Han, Shivchander Sudalairaj, Charlotte Loh, Rumen Dangovski, Fei Deng, Pulkit Agrawal, Dimitris Metaxas, Leonid Karlinsky, Tsui-Wei Weng, Akash Srivastava. Accepted to Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2023 [arXiv] [poster] [Github] [bibtex]

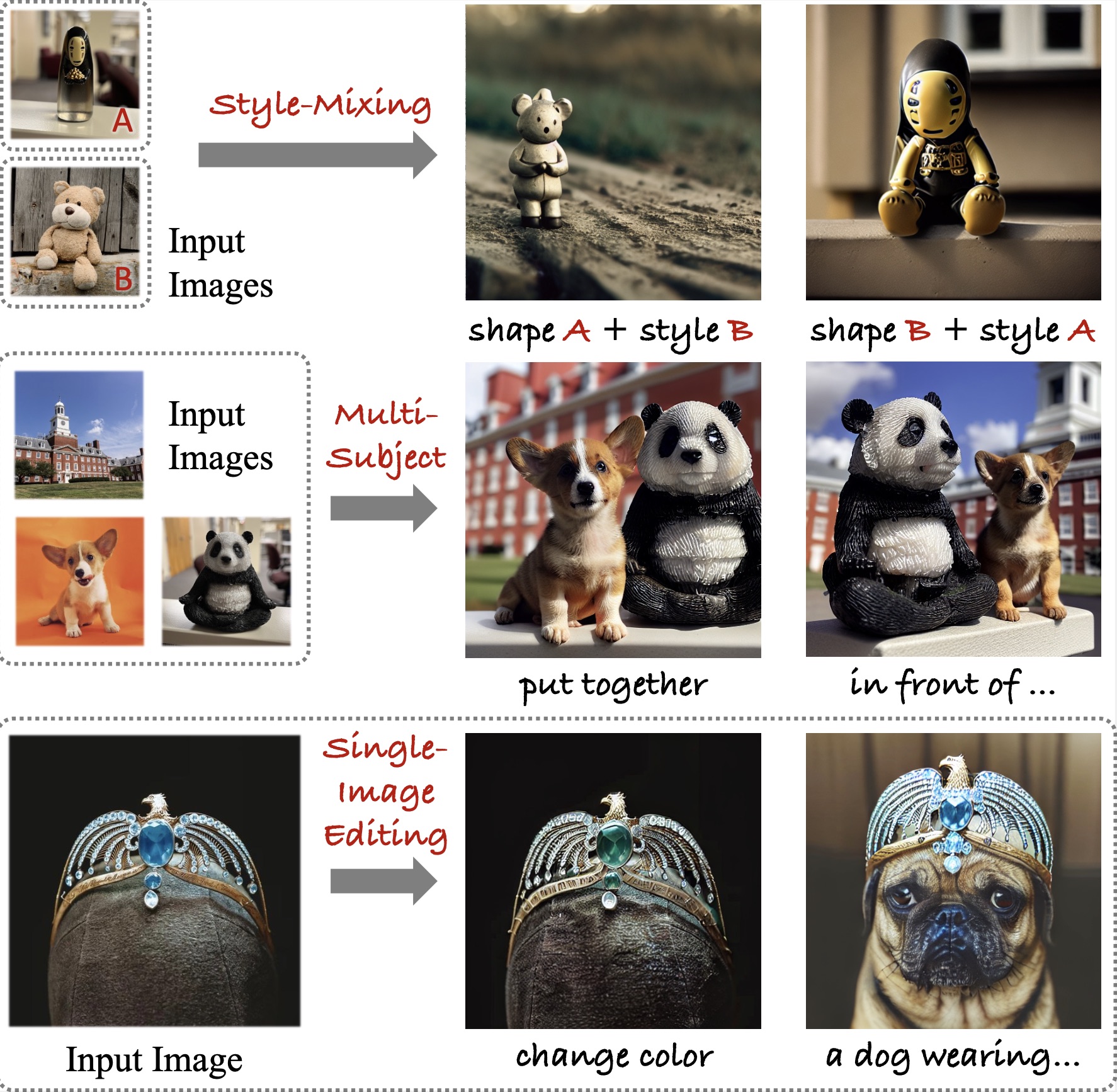

Zhixing Zhang, Ligong Han†, Arnab Ghosh, Dimitris Metaxas, Jian Ren. Accepted to Conference on Computer Vision and Pattern Recognition (CVPR), 2023 [arXiv] [Github] [Project Page] [bibtex]

Anastasis Stathopoulos, Georgios Pavlakos, Ligong Han, Dimitris Metaxas. Accepted to Conference on Computer Vision and Pattern Recognition (CVPR), 2023 [arXiv] [code & data] [Project Page] [bibtex]

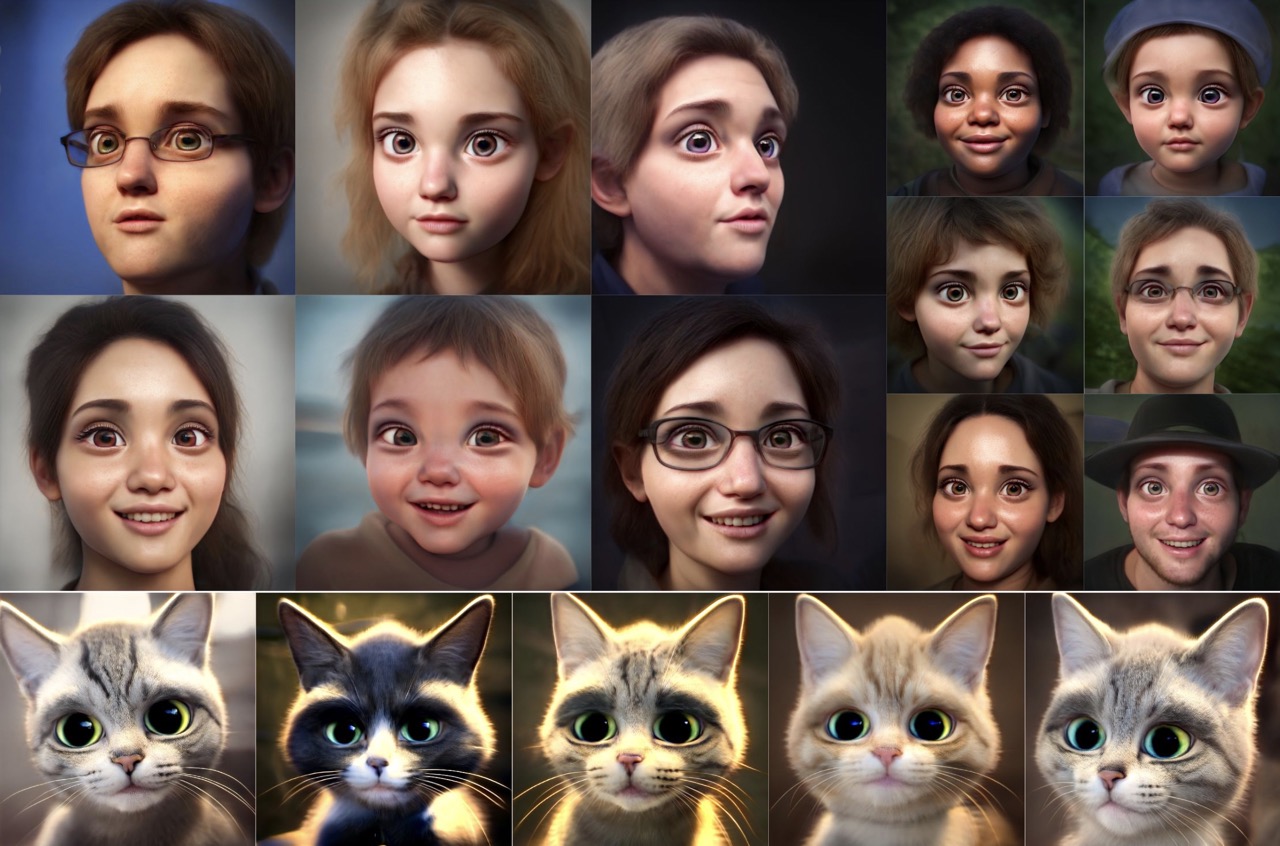

Kunpeng Song, Ligong Han, Bingchen Liu, Dimitris Metaxas, Ahmed Elgammal. Accepted at Winter Conference on Applications of Computer Vision (WACV), 2024 [arXiv] [Github] [Project Page] [bibtex]

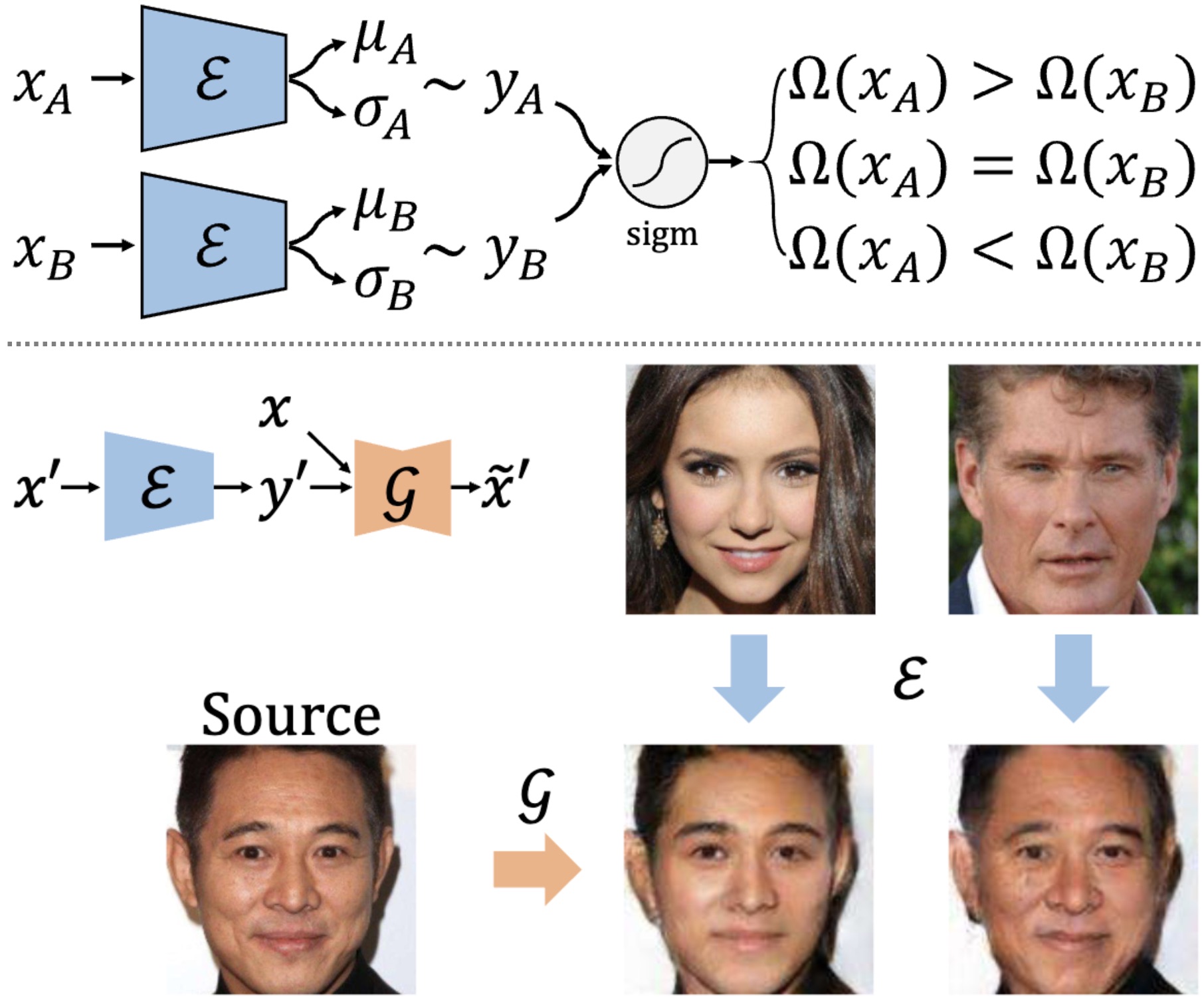

Ligong Han†, Jian Ren, Hsin-Ying Lee, Francesco Barbieri, Kyle Olszewski, Shervin Minaee, Dimitris Metaxas, Sergey Tulyakov. Accepted at Conference on Computer Vision and Pattern Recognition (CVPR), 2022 [arXiv] [poster] [Github] [Project Page] [bibtex]

Ligong Han*†, Sri Harsha Musunuri*, Martin Renqiang Min, Ruijiang Gao, Yu Tian, Dimitris Metaxas. Accepted at Winter Conference on Applications of Computer Vision (WACV), 2022 [arXiv] [poster] [Github] [bibtex]

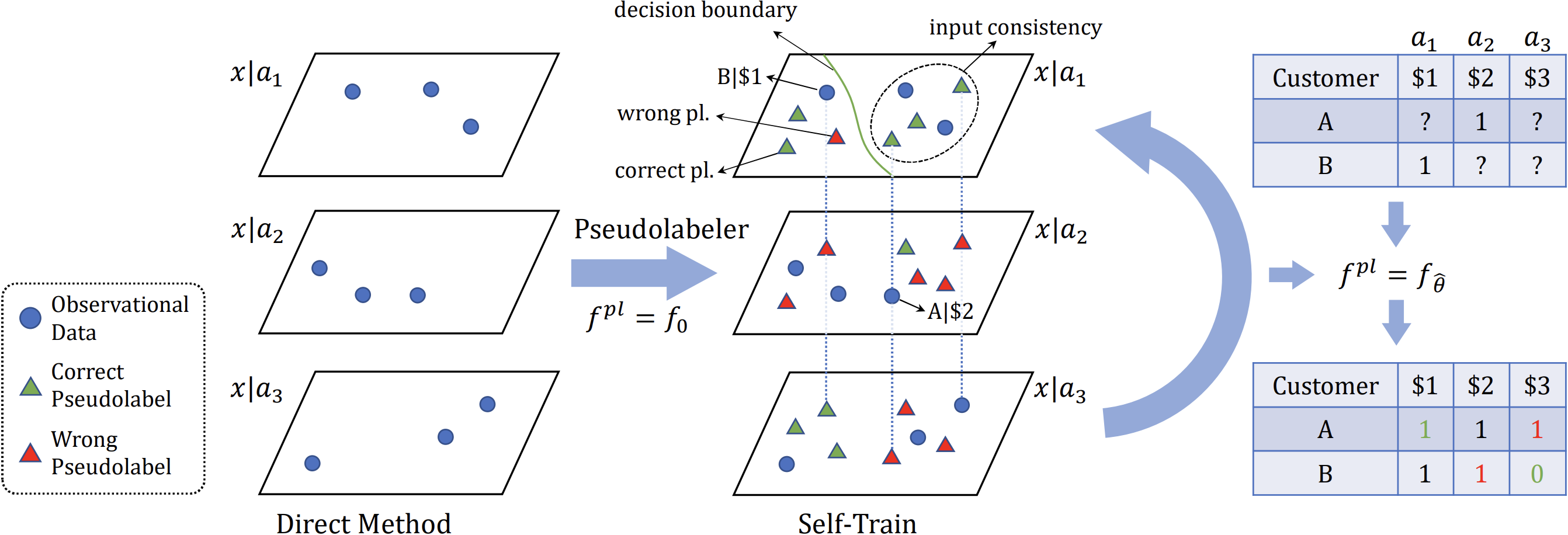

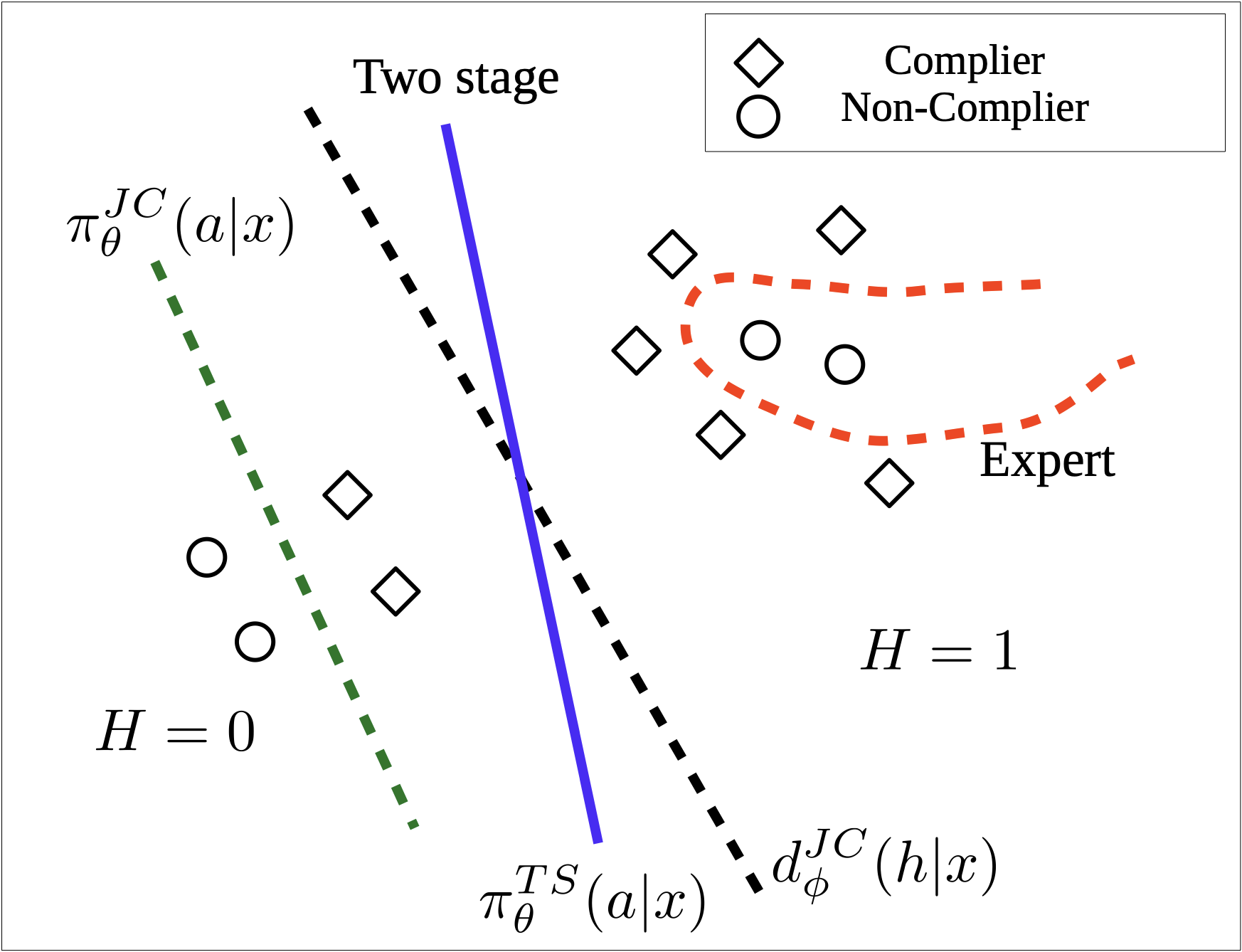

Ruijiang Gao, Max Biggs, Wei Sun, Ligong Han. Accepted to AAAI Conference on Artificial Intelligence (AAAI), 2022 [arXiv] [Github] [bibtex]

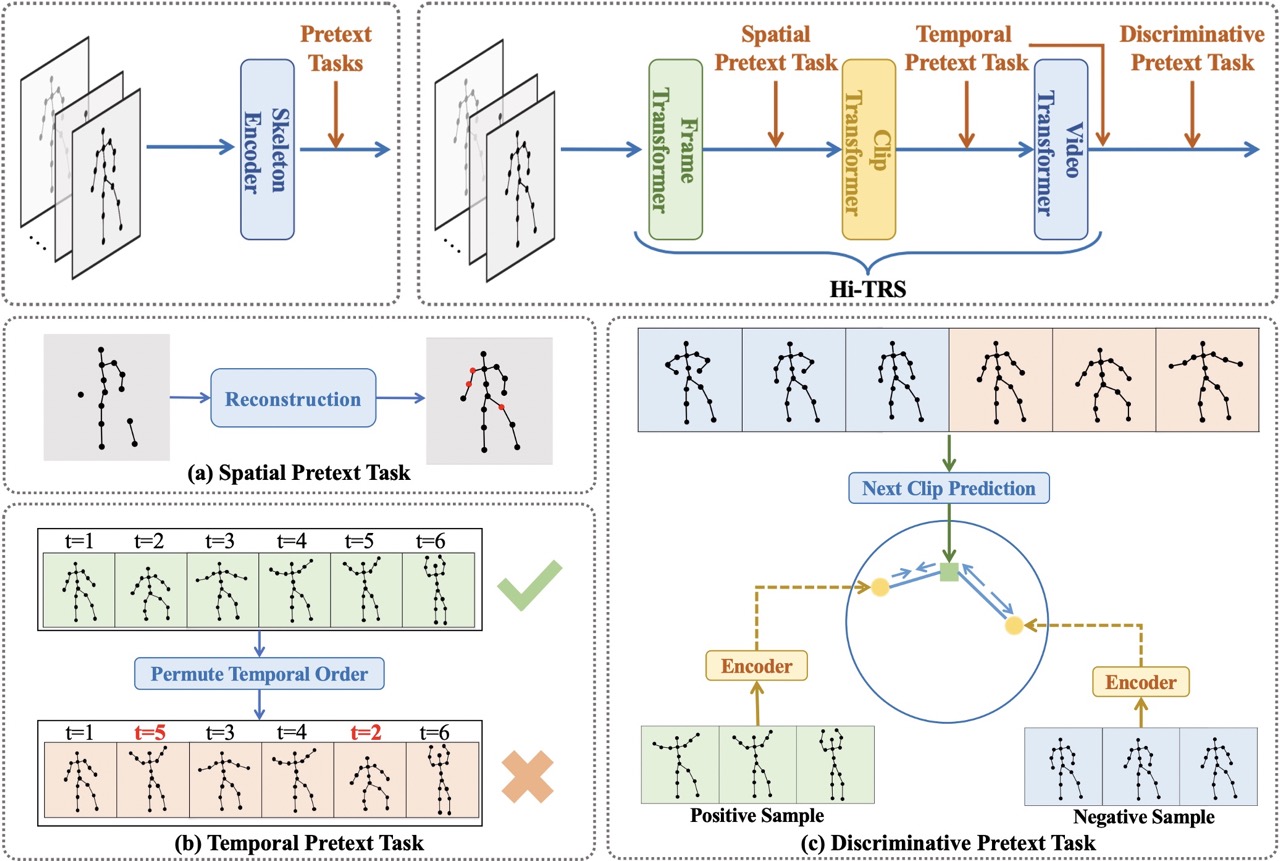

Yuxiao Chen, Long Zhao, Jianbo Yuan, Yu Tian, Zhaoyang Xia, Shijie Geng, Ligong Han, Dimitris Metaxas. Accepted to European Conference on Computer Vision (ECCV), 2022 [arXiv] [Github] [bibtex]

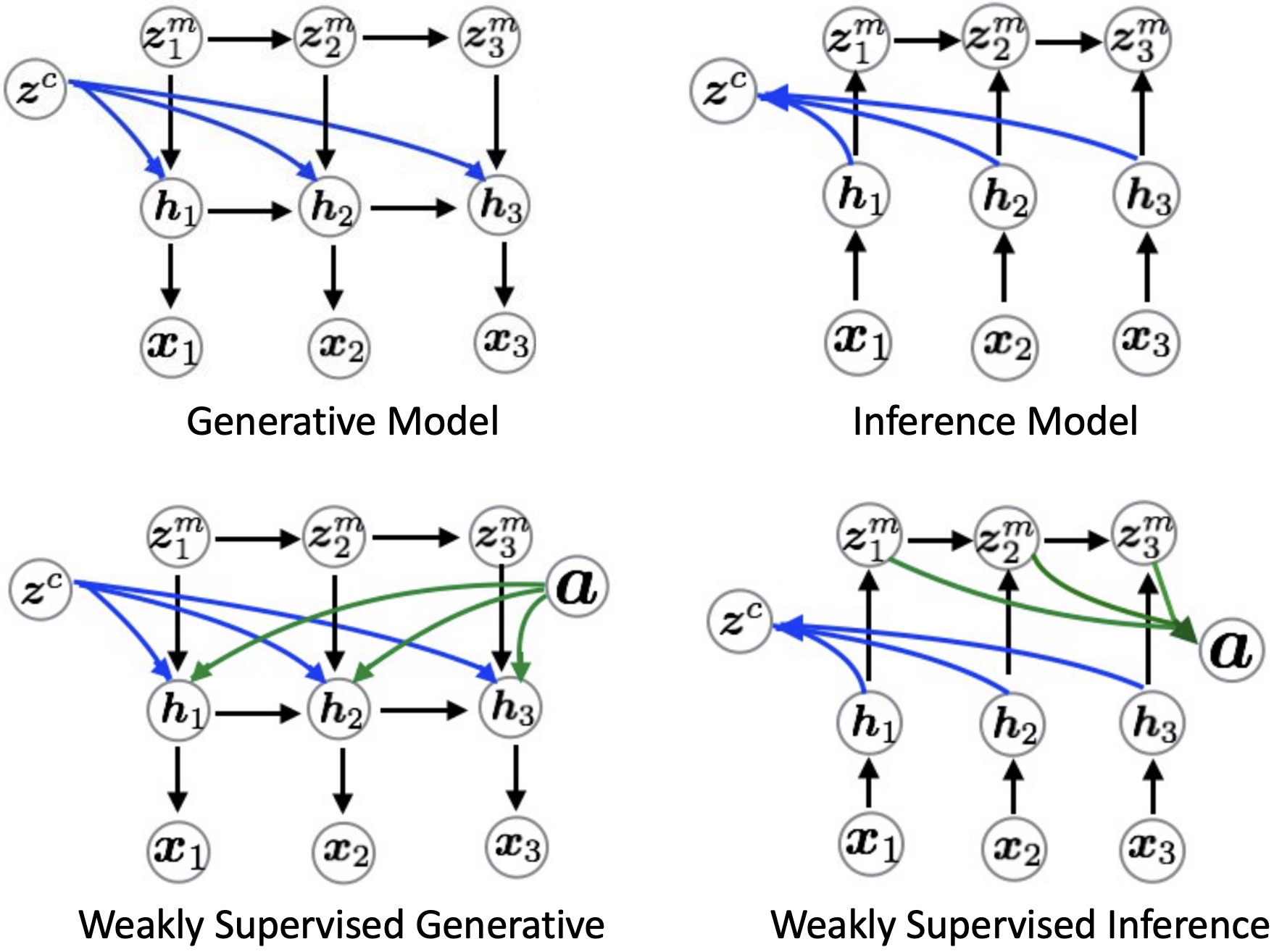

Jun Han*, Martin Renqiang Min*, Ligong Han*, Li Erran Li, Xuan Zhang. Accepted to International Conference on Learning Representations (ICLR Spotlight, scored among the top 4%), 2021 [arXiv] [Code] [bibtex]

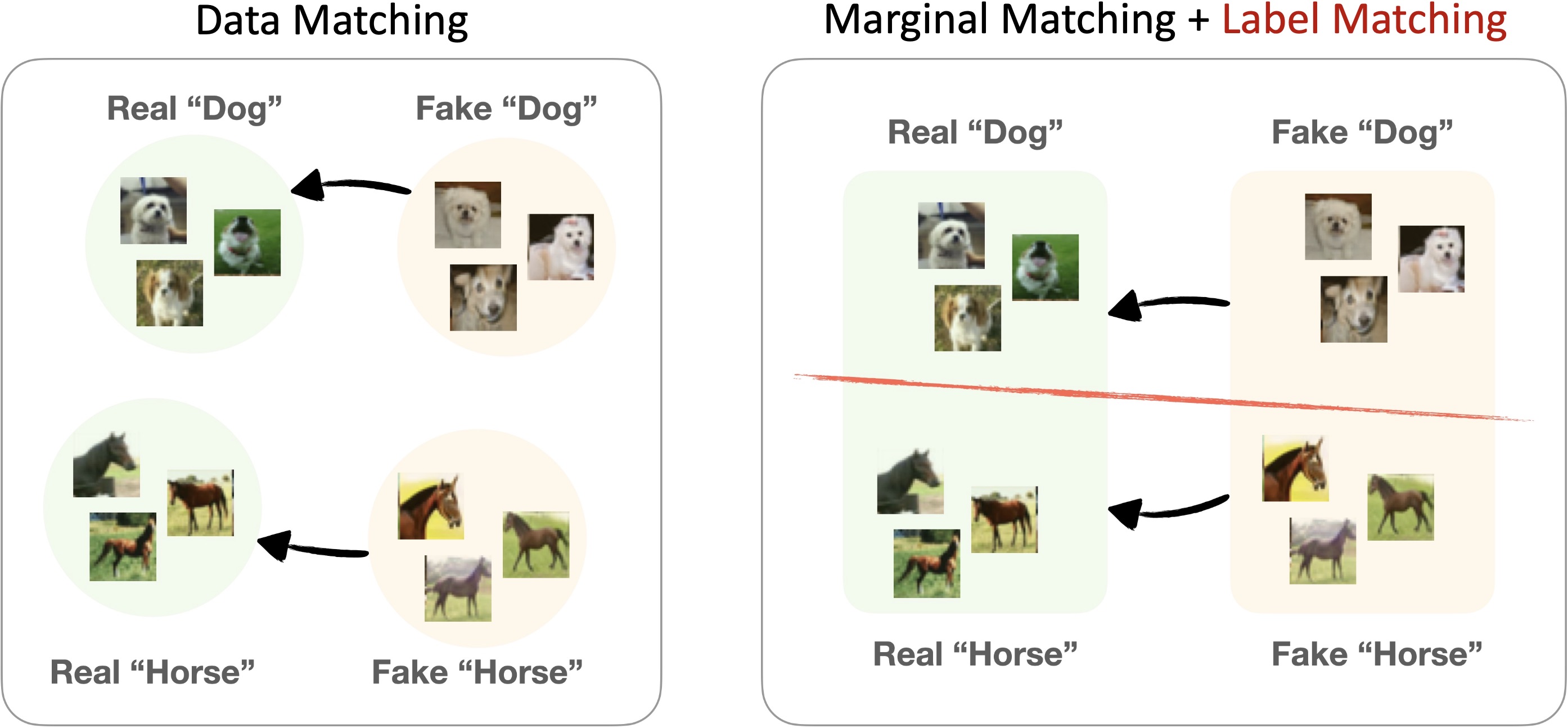

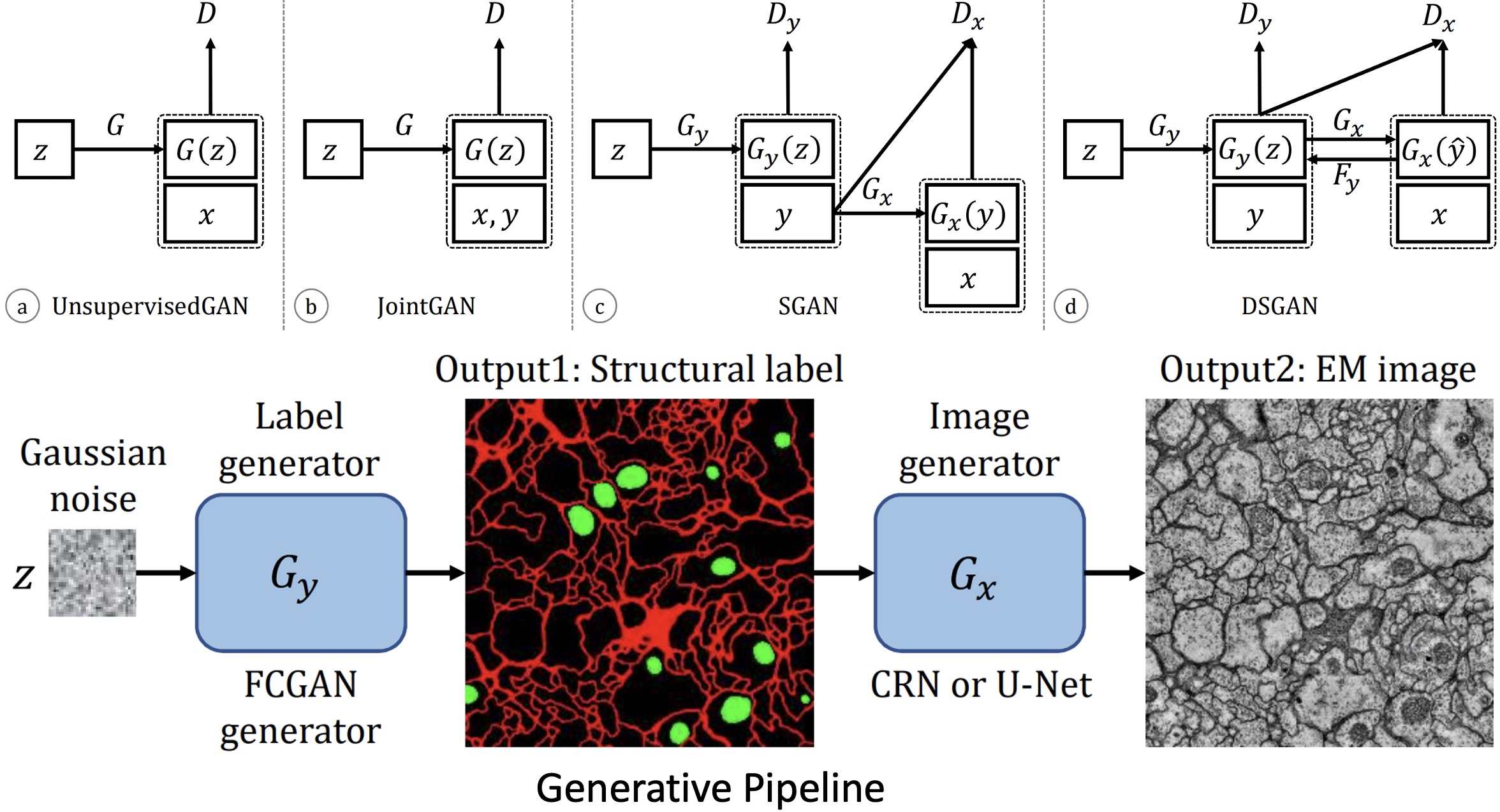

Ligong Han†, Martin Renqiang Min, Anastasis Stathopoulos, Yu Tian, Ruijiang Gao, Asim Kadav, Dimitris Metaxas. Accepted to International Conference on Computer Vision (ICCV), 2021 [arXiv] [poster] [Github] [bibtex]

Ruijiang Gao, Maytal Saar-Tsechansky, Maria De-Arteaga, Ligong Han, Min Kyung Lee, Matthew Lease. Accepted to International Joint Conference on Artificial Intelligence (IJCAI), 2021 [arXiv] [Github] [bibtex]

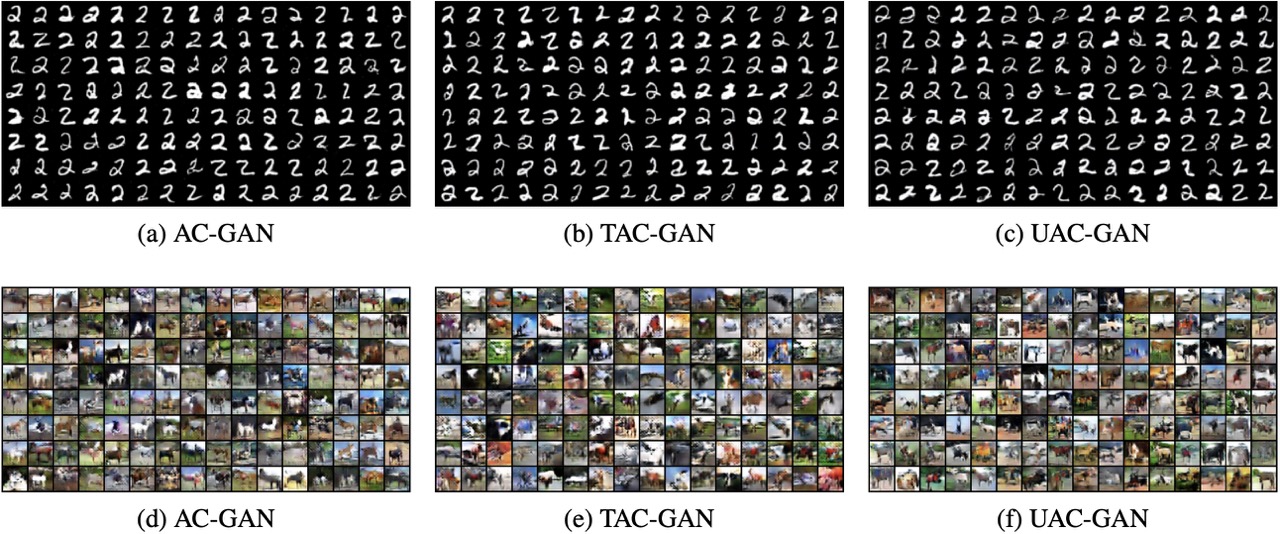

Ligong Han†, Ruijiang Gao, Mun Kim, Xin Tao, Bo Liu, Dimitris Metaxas. Accepted to AAAI Conference on Artificial Intelligence (AAAI), 2020 [arXiv] [poster] [Github] [bibtex]

Ligong Han†, Anastasis Stathopoulos, Tao Xue, Dimitris Metaxas. Accepted to Conference on Computer Vision and Pattern Recognition Workshops (CVPRW DeepMind Travel Award), 2020 [arXiv] [Github] [bibtex]

Ligong Han†, Yang Zou, Ruijiang Gao, Lezi Wang, Dimitris Metaxas. Accepted to Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019 [arXiv] [Github] [bibtex]

Ligong Han†, Robert F. Murphy, Deva Ramanan. Accepted to Winter Conference on Applications of Computer Vision (WACV), 2018 [arXiv] [Github] [bibtex]

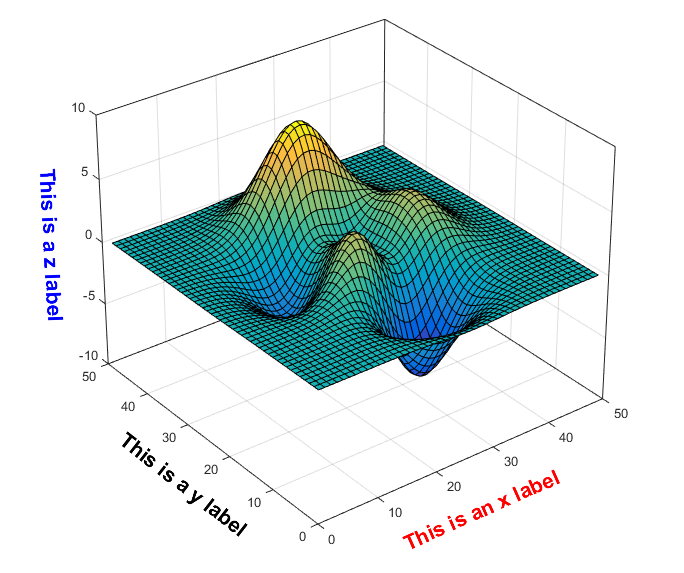

[2015]

[File Exchange] [Github] Align axis labels nicely in parallel with axes in MATLAB (3-D) plots. This file was selected as a MATLAB Central Pick of the Week.

Website source from Jon Barron.